These are all tree-based ensemble methods in machine learning. They combine multiple decision trees to create a more powerful predictive model. Let’s go step by step:

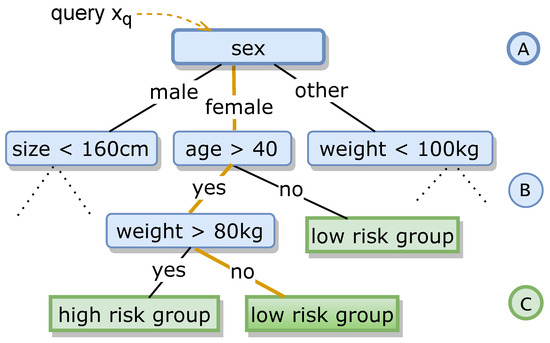

🌳 Decision Trees (the building block)

A decision tree splits data into groups based on feature thresholds (e.g., “Is age > 40?”).

They are:

- Easy to interpret

- Handle both numerical and categorical data

- But: prone to overfitting and instability (small changes in data can change the tree a lot).

Ensemble methods solve these issues by combining many trees.

1. Random Forests (RF)

- Type: Bagging ensemble of decision trees.

- How it works:

- Build many decision trees on bootstrapped (randomly resampled) subsets of the data.

- At each split, only a random subset of features is considered.

- Predictions are averaged (regression) or voted (classification).

- Strengths:

- Reduces variance (overfitting).

- Works well with minimal tuning.

- Robust to noise and outliers.

- Weaknesses:

- Large models, slower predictions than a single tree.

- Less interpretable.

Think of it as a committee of trees that vote independently.

2. Gradient Boosted Trees (GBT)

- Type: Boosting ensemble of decision trees.

- How it works:

- Trees are built sequentially.

- Each new tree tries to correct errors made by the previous trees, using gradient descent to minimize loss.

- Final prediction = weighted sum of all trees.

- Strengths:

- Usually more accurate than Random Forests.

- Can optimize arbitrary loss functions.

- Weaknesses:

- More sensitive to hyperparameters (learning rate, depth).

- Slower to train than Random Forests.

Think of it as a series of trees where each one fixes the mistakes of the last.

3. XGBoost (Extreme Gradient Boosting)

- An optimized implementation of gradient boosting.

- Key innovations:

- Second-order gradient optimization (uses both gradient and curvature).

- Regularization (helps prevent overfitting).

- Parallelization for speed.

- Often a go-to model in Kaggle competitions.

4. LightGBM (by Microsoft)

- Another optimized gradient boosting library.

- Key innovations:

- Leaf-wise tree growth (instead of level-wise) → deeper, more accurate splits.

- Histogram-based splits → faster and memory efficient.

- Handles very large datasets with high-dimensional features well.

- Typically faster than XGBoost on large datasets.

5. CatBoost (by Yandex)

- Gradient boosting with a focus on categorical features.

- Key innovations:

- Handles categorical variables natively (no need for one-hot encoding).

- Uses ordered boosting to reduce overfitting.

- Strong performance out-of-the-box with minimal tuning.

🔑 Summary Comparison

| Model | Type | Strengths | Best Use Cases |

|---|---|---|---|

| Random Forest | Bagging | Robust, simple, less tuning | General-purpose, baseline model |

| Gradient Boosting | Boosting | High accuracy, flexible loss | When accuracy matters most |

| XGBoost | GBT impl. | Regularization, fast, proven | Kaggle, tabular ML |

| LightGBM | GBT impl. | Very fast, large datasets | High-dimensional / big data |

| CatBoost | GBT impl. | Native categorical handling | Mixed data with categories |

👉 In practice:

- Start with Random Forest for a baseline.

- Try LightGBM/XGBoost/CatBoost if you want top performance on structured/tabular data.