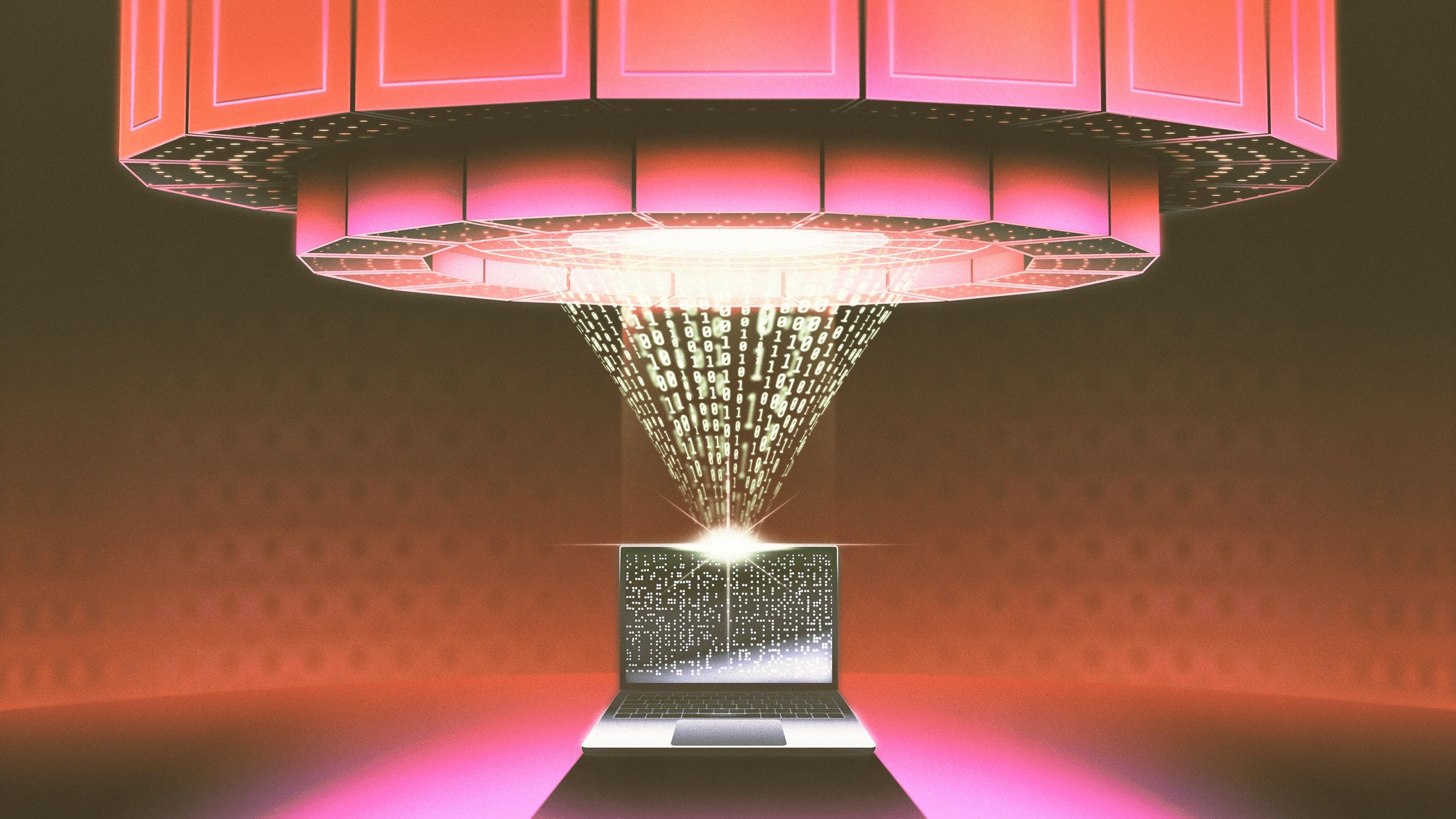

A fundamental technique lets researchers use a big, expensive model to train another model for less.

*Distillation Can Make AI Models Smaller and Cheaper*, by Amos Zeeberg, Wired